
Supercharge with your Personal AI

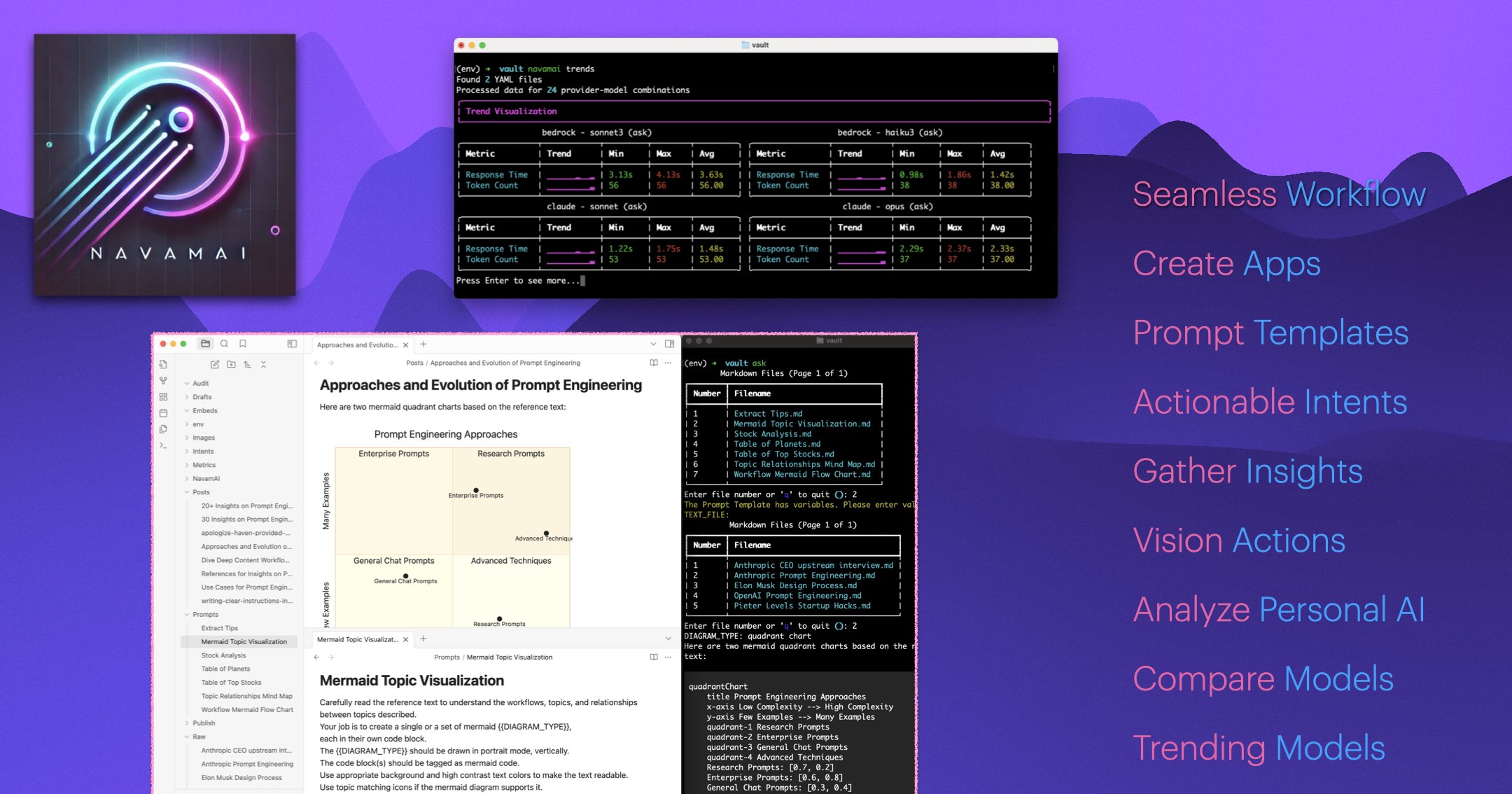

Use NavamAI to supercharge your productivity and workflow with personal, fast, and quality AI. Turn your Terminal into a configurable, interactive, and unobtrusive personal AI app. Put the power of 15 LLMs and 7 providers at your fingertips. Pair with workflows in Markdown, VS Code, Obsidian, and GitHub. Get productive fast with three simple commands.

Automate Markdown Workflows

NavamAI works with markdown content (text files with simple formatting commands), so you can use it with many popular tools like Obsidian and VS Code, and with static site generators like Material for MkDocs and Jekyll for GitHub Pages, to quickly and seamlessly design a custom workflow that enhances your craft.

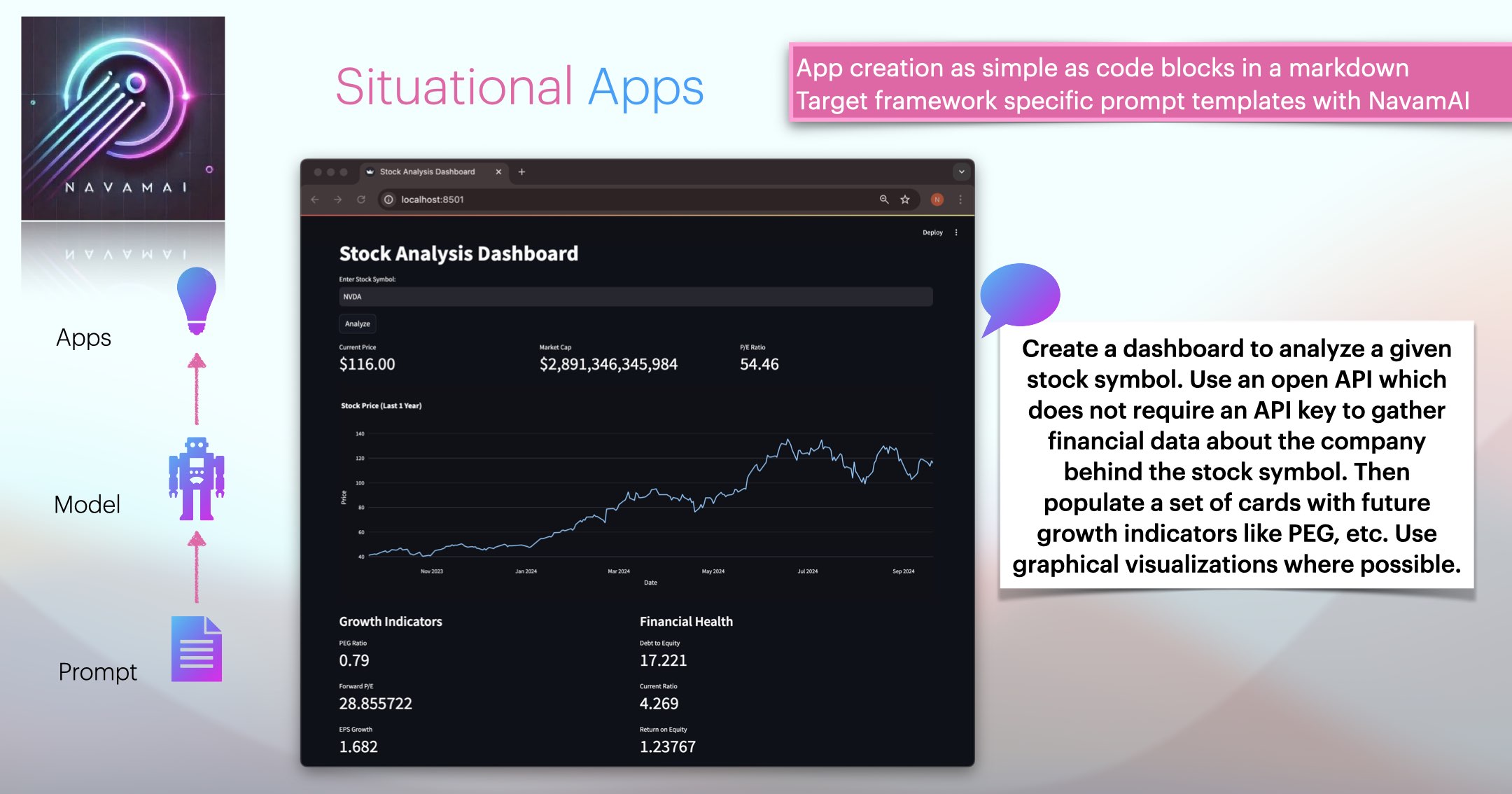

Generate Situational Apps

You can create, install, and run fully functional web apps with NavamAI. Situational apps on your command! Just run the ask command and select the app creation prompt template. NavamAI runs the app on your laptop within seconds. You don't need to do any setup, touch a single line of code, or invest in any service.

NavamAI generates a markdown blog with code blocks and a section for each file in your project, including install and run scripts. You can view and edit the code within any markdown-supporting tool like Obsidian to make minor changes. You can also write an Instructions heading and section to inline prompt any changes to the app, then iterate with the refer iterate-code command until you get exactly the app you need. Read more about the situational app generation workflow on the NavamAI Substack.
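
To make the code-blog idea concrete, here is a minimal Python sketch of how per-file sections with code blocks could be turned back into files. The layout it assumes (a `##` heading holding the file path, followed by one fenced block) and the names `extract_files` and `FENCE` are illustrative assumptions, not NavamAI's documented format or implementation.

```python
import re

# Build the fence marker programmatically so this example can itself
# live inside a fenced markdown block.
FENCE = "`" * 3


def extract_files(markdown_text: str) -> dict[str, str]:
    """Map each '## <path>' heading to the first fenced code block after it.

    Assumed layout (hypothetical, not NavamAI's documented format):
    a '## path/to/file' heading followed by one fenced code block.
    """
    files: dict[str, str] = {}
    current_path = None  # path taken from the most recent '##' heading
    in_block = False     # True while reading lines inside a fenced block
    buffer: list[str] = []

    for line in markdown_text.splitlines():
        if in_block:
            if line.startswith(FENCE):
                # Closing fence: keep only the first block per heading.
                if current_path is not None and current_path not in files:
                    files[current_path] = "\n".join(buffer) + "\n"
                in_block = False
                buffer = []
            else:
                buffer.append(line)
        elif line.startswith(FENCE):
            in_block = True
        else:
            heading = re.match(r"^##\s+(.+?)\s*$", line)
            if heading:
                current_path = heading.group(1)
    return files


sample = "\n".join([
    "# Demo App",
    "## index.html",
    FENCE + "html",
    "<h1>Hello</h1>",
    FENCE,
    "## Instructions",
    "Make the heading blue.",
    "## run.sh",
    FENCE + "bash",
    "npm install",
    "npm run dev",
    FENCE,
])

files = extract_files(sample)
print(sorted(files))
```

In this sketch the Instructions section carries no code block, so it is skipped when files are collected; only sections that contain a fenced block produce an entry.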

For example, it takes only two NavamAI commands and less than a minute to create a stock analysis dashboard with live market data working in your local browser.

Benefits of the NavamAI approach to app generation include:

No code setup, install, and run

- You do not have to worry about setting up required frameworks or installing your project's dependencies.

- You can change your stack by just updating it in the prompt template. Want to replace Vite with Vue? One line change in the prompt template.

Choice of models and providers

- You can experiment with any of 15 models and 7 providers to see which one meets your code gen quality and context size requirements.

- Support the latest models as they become available by making simple changes to the NavamAI config.

Iterative, versioned, dive into code

- You can start small and play with basic features of your app before enhancing it.

- Your code is generated as code in your designated Apps folder. Edit it in your favorite IDE.

- Your code is also converted to a blog with code blocks so you can view and make minor changes in your Markdown editor like Obsidian.

- Support inline prompting with the refer command to make tweaks to your code incrementally, while maintaining versions of your code blog.

Quick Start

Go to a folder where you want to initialize NavamAI. This could be your Obsidian vault, a VS Code project folder, or even an empty folder.

```bash
pip install -U navamai # upgrade or install latest NavamAI
navamai init # copies config file, quick start samples
navamai id # identifies active provider and model
ask "How old is the oldest pyramid?" # start prompting the model
```

Command Is All You Need

LLM science fans will get the pun in our tagline. It is a play on the famous paper that introduced the world to the Transformer model architecture, Attention Is All You Need. With NavamAI, a simple command in your favorite terminal or shell is all you need to bend a large or small language model to your wishes.

NavamAI provides a rich UI right there within your command prompt. No browser tabs to open, no apps to install, no context switching... just pure, simple, fast workflow. Try it with a simple command like ask "create a table of planets" and see your Terminal come to life just like a chat UI with fast streaming responses, markdown formatted tables, and even code blocks with color highlights if your prompt requires code in response!

NavamAI has released 15 commands to help customize your personal AI workflow.

*Note that navamai, ask, image, and refer are the four top-level commands available to you when you install NavamAI.

| Command | Example and Description |
|---|---|
| *ask | `ask "your prompt"`<br>Prompt the LLM for a fast, crisp (default up to 300 words), single turn response.<br>`ask`<br>Browses the configured prompts folder, lists prompt templates for user to run. |
| audit | `navamai audit`<br>Analyze your own usage of NavamAI over time with an insightful command line dashboard and markdown report. |
| config | `navamai config ask save true`<br>Edit navamai.yml file config from command line. |
| gather | `navamai gather "webpage url"`<br>Cleanly scrape an article from a webpage, without the menus, sidebar, footer. Includes images. Saves as markdown formatted similar to the HTML source. Refer the scraped markdown content using `refer gather` command. Use vision on scraped images using `navamai vision` command. |
| *refer | `refer text-to-extract`<br>Browse a set of raw text files and run custom prompts to extract new structured content.<br>`refer inline-prompts-to-run`<br>Run prompts embedded inline within text to expand, change, and convert existing documents.<br>`refer intents`<br>Expand a set of intents and prompts within an intents template.<br>`refer your-command`<br>You can configure your own extensions to refer command by simply copying and changing any of the existing refer-existing model configs. |
| run | `navamai run`<br>Browse Code folder for markdown files with code blocks, create, setup, and run the app in one single command! The Code folder markdown files with code blocks are created using ask command running Create Vite App.md prompt template or similar. |
| id | `navamai id`<br>Identifies the current provider and model for ask command.<br>`navamai id section-name`<br>Identifies the provider and model defined in specific section. |
| *image | `image`<br>Select a prompt template to generate an image.<br>`image "Prompt for image to generate"`<br>Prompt to generate an image. |
| init | `navamai init`<br>Initialize navamai in any folder. Copies navamai.yml default config and quick start Intents and Embeds folders and files. Checks before overwriting. Use `--force` option to force overwrite files and folders. |
| intents | `navamai intents "Financial Analysis"`<br>Interactively choose from a list of intents within a template to refer into content embeds. |
| merge | `navamai merge "Source File"`<br>Finds Source File updated with placeholder tags [merge here] or as custom defined in merge config, then merges the two files into Source File merged. Use along with `refer inline-prompts-to-run` command to reduce number of tokens processed when editing long text for context but updating only a section. |
| split | `navamai split "Large File"`<br>Use this command to split a large file into chunks within a specified ratio of target model context. You can configure target model and ratio in split section of the configuration. Original file remains untouched and new files with same name and part # suffix are created. |
| test | `navamai test ask`<br>Tests navamai command using all providers and models defined in navamai.yml config and provides a test summary.<br>`navamai test vision`<br>Test vision models. |
| trends | `navamai trends`<br>Visualize latency and token length trends based on navamai test runs for ask and vision commands across models and providers. You can trend for a period of days using `--days 7` command option. |
| validate | `navamai validate "Financial Analysis"`<br>Validates prior generated embeds running another model and reports the percentage difference between validated and original content. |
| vision | `navamai vision -p path/to/image.png "Describe this image"`<br>Runs vision models on images from local path (`-p`), url (`-u`), or camera (`-c`) and responds based on prompt. |
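
As a rough illustration of what the split command describes, the Python sketch below breaks text into chunks that fit within a ratio of a model's context window. The 4-characters-per-token estimate, the paragraph-boundary strategy, and the names `split_text` and `part_name` are assumptions made for this example; NavamAI's actual token counting, chunking logic, and file naming may differ.

```python
from pathlib import Path

CHARS_PER_TOKEN = 4  # crude heuristic (assumption), not a real tokenizer


def split_text(text: str, context_tokens: int, ratio: float) -> list[str]:
    """Split text on blank lines into chunks that stay within
    context_tokens * ratio estimated tokens.

    A single paragraph larger than the budget is kept whole rather than cut.
    """
    budget_chars = int(context_tokens * ratio * CHARS_PER_TOKEN)
    chunks: list[str] = []
    current = ""
    for paragraph in text.split("\n\n"):
        candidate = paragraph if not current else current + "\n\n" + paragraph
        if current and len(candidate) > budget_chars:
            chunks.append(current)  # current chunk is full; start a new one
            current = paragraph
        else:
            current = candidate
    if current:
        chunks.append(current)
    return chunks


def part_name(source: Path, part: int) -> Path:
    """Hypothetical naming scheme: 'notes.md' -> 'notes part 1.md'."""
    return source.with_name(f"{source.stem} part {part}{source.suffix}")


# Example: three 40-character paragraphs against a 100-character budget
# (100 tokens * 0.25 ratio * 4 characters per token).
text = "\n\n".join(["a" * 40, "b" * 40, "c" * 40])
chunks = split_text(text, context_tokens=100, ratio=0.25)
for number, chunk in enumerate(chunks, start=1):
    print(part_name(Path("Large File.md"), number), len(chunk))
```

Splitting on paragraph boundaries keeps each part readable on its own, and joining the parts with blank lines reproduces the original text, which mirrors the table's note that the original file remains untouched.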

There is no behavioral marketing or growth hacking a business can do within your command prompt. You guide your workflow as you see fit. Run the fastest model provider (Groq with Llama 3.1), the most capable model right now (Sonnet 3.5 or GPT-4o), the latest small model on your laptop (Mistral Nemo), or the model with the largest context (Gemini 1.5 Flash); you decide. Run with fast Wi-Fi or no Wi-Fi (using local models), with no constraints. Instantly search, research, or run Q&A to learn something, or generate a set of artifacts to save for later. Switching to any of these workflows takes a couple of changes in a config file or a few easy-to-remember commands in your terminal.

Do More With Less

NavamAI is very simple to use out of the box as you learn its handful of powerful commands. As you get comfortable, you can customize NavamAI commands simply by changing one configuration file to align NavamAI with your workflow. Everything in NavamAI has sensible defaults to get started quickly.

Make It Your Own

When you are ready, everything is configurable and extensible including commands, models, providers, prompts, model parameters, folders, and document types. Another magical thing happens when the interface to your generative AI is a humble command prompt. You will experience a sense of being in control. In control of your workflow, your privacy, your intents, and your artifacts. You are completely in control of your personal AI workflow with NavamAI.